Hierarchical Cluster Analysis

Learn how to run a hierarchical cluster analysis in SPSS, interpret the SPSS output, and report the results in APA style with this short, easy-to-follow guide.

1. Introduction

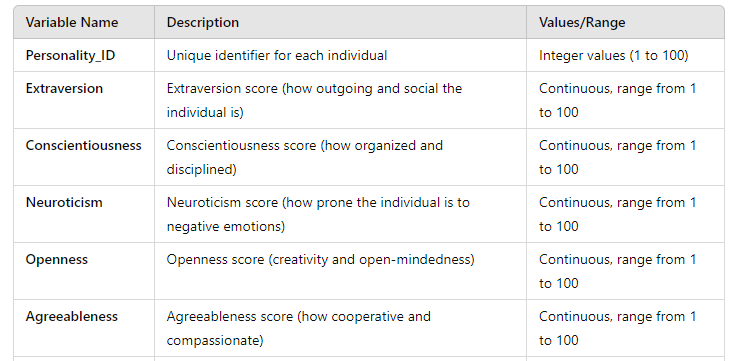

9. How to Perform Hierarchical Cluster Analysis in SPSS

Step by Step: Running Hierarchical Cluster Analysis in SPSS Statistics

The steps below walk through running a hierarchical cluster analysis in SPSS.

- STEP: Load Data into SPSS

Start by launching SPSS and loading your dataset, which should contain the variables you want to cluster on, typically continuous measures. If your data is not already in SPSS format, you can import it by navigating to File > Open > Data and selecting your data file.

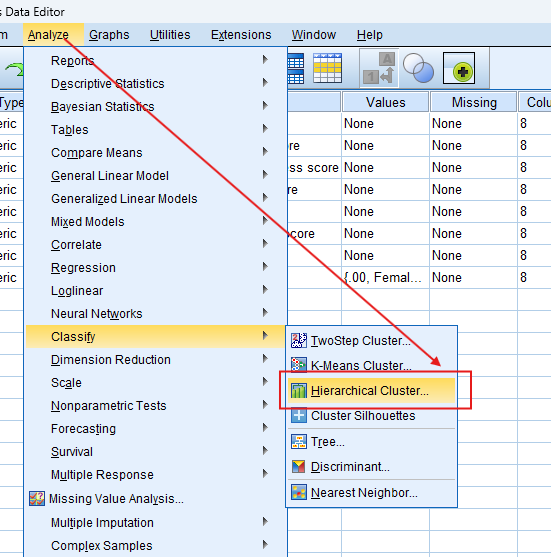

- STEP: Access the Analyze Menu

In the top menu, go to Analyze > Classify > Hierarchical Cluster.

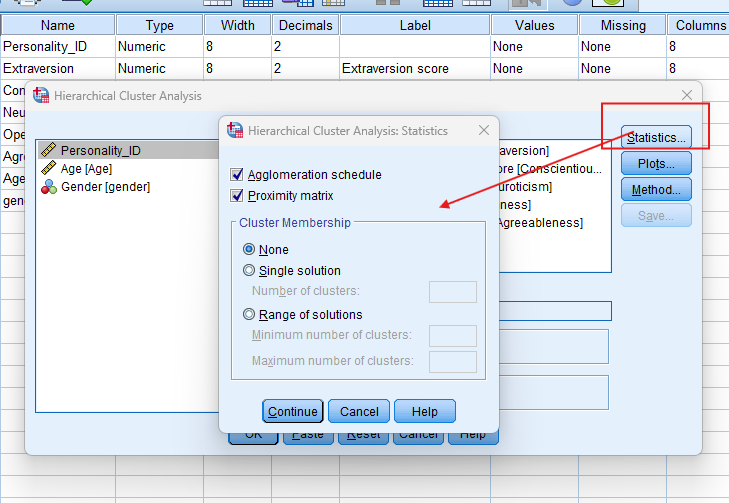

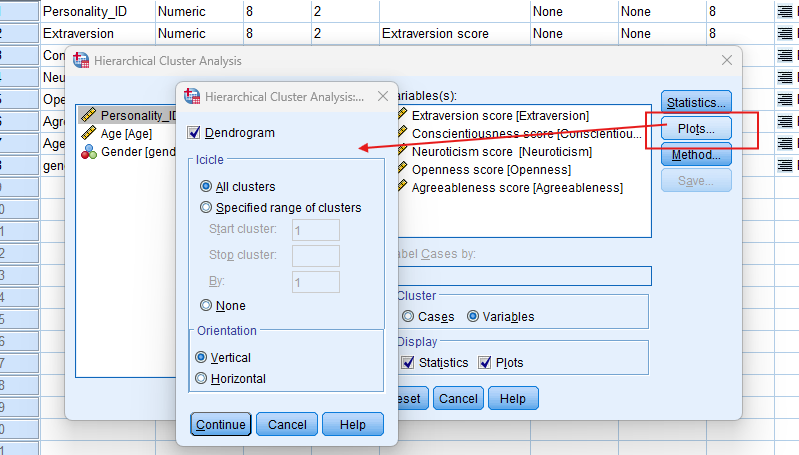

- STEP: Specify Variables

- Select your variables: Move continuous variables (e.g., personality traits) into the analysis.

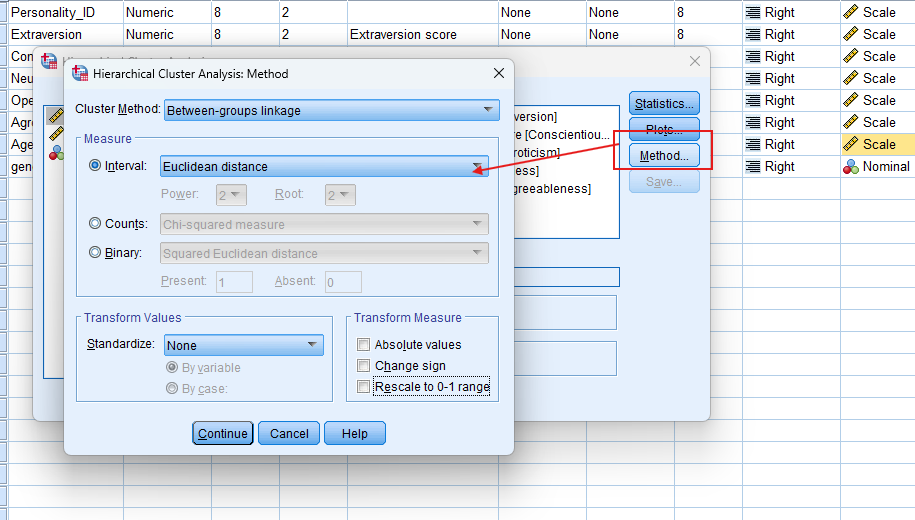

- Click Method. Under “Cluster Method”, select your preferred method, such as Between-groups Linkage or Nearest Neighbor.

- In the same dialog, under “Measure”, choose a distance or similarity measure such as Euclidean Distance or Pearson Correlation.
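
To make the linkage and distance choices in this step more concrete, here is a minimal Python sketch (not SPSS syntax) of how Euclidean distance feeds into two of the linkage rules named above. The function names and the scores are hypothetical, made up for illustration; SPSS performs these calculations internally and its implementation may differ in detail.

```python
import math
from itertools import product


def euclidean(a, b):
    """Straight-line distance between two cases measured on the same variables."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbor(cluster_a, cluster_b):
    """Nearest neighbor (single linkage): distance between the closest pair of cases."""
    return min(euclidean(p, q) for p, q in product(cluster_a, cluster_b))


def between_groups(cluster_a, cluster_b):
    """Between-groups (average) linkage: mean distance over all cross-cluster pairs."""
    dists = [euclidean(p, q) for p, q in product(cluster_a, cluster_b)]
    return sum(dists) / len(dists)


# Hypothetical scores on two continuous variables (assumed data, not from SPSS).
cluster_a = [(0.0, 0.0), (0.0, 1.0)]
cluster_b = [(3.0, 0.0), (4.0, 0.0)]

single = nearest_neighbor(cluster_a, cluster_b)
average = between_groups(cluster_a, cluster_b)
print(f"Nearest neighbor: {single:.3f}")
print(f"Between-groups:   {average:.3f}")
```

With these made-up scores, nearest neighbor gives 3.0 and between-groups linkage gives about 3.57. The same pair of clusters looks closer under nearest neighbor because only the closest pair of cases counts, which is why the choice of method can change which clusters merge first.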

- STEP: Generate SPSS Output

- Click ‘OK’ after selecting your variables and method. SPSS will run the analysis and generate the output tables and any plots you requested, such as a dendrogram.

Note: The exact steps may differ slightly between SPSS versions. This guide is tailored for SPSS version 25; for other versions, refer to the software’s documentation for accurate and updated instructions.

10. SPSS Output for Hierarchical Cluster Analysis

11. How to Interpret SPSS Output of Hierarchical Cluster Analysis

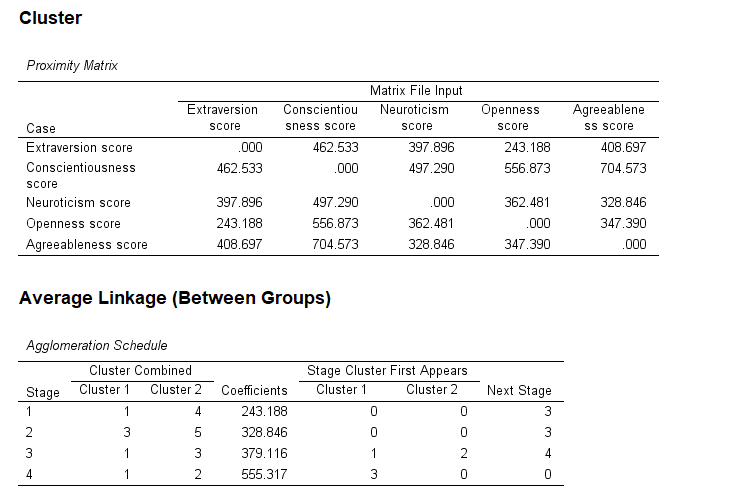

The SPSS output includes the Case Processing Summary, the proximity matrix, the agglomeration schedule, and the dendrogram. The main elements are read as follows:

- Dendrogram: Displays the hierarchy of clusters, showing how cases or variables merge step by step.

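- Agglomeration Schedule: Shows, stage by stage, which clusters are merged and the distance or similarity coefficient at which they merge. A large jump in the coefficient from one stage to the next suggests a sensible point at which to stop merging.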

- Cluster Membership: Provides a list of cases and the cluster they belong to based on the selected cutoff point in the dendrogram.

- Cluster Sizes: Lists the number of cases in each cluster to evaluate the balance between clusters.
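
The link between the agglomeration schedule, cluster membership, and cluster sizes can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not SPSS's own algorithm or output format: the five cases are invented, the function names are hypothetical, and between-groups (average) linkage with Euclidean distance is assumed.

```python
import math
from collections import Counter
from itertools import combinations, product


def euclidean(a, b):
    """Straight-line distance between two cases."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def average_linkage(points, a, b):
    """Mean distance over all pairs of cases drawn from clusters a and b."""
    dists = [euclidean(points[i], points[j]) for i, j in product(a, b)]
    return sum(dists) / len(dists)


def agglomerate(points, n_clusters):
    """Merge the two closest clusters until one remains.

    Returns the full merge history (a simplified agglomeration schedule)
    and the cluster solution at the moment n_clusters clusters existed.
    """
    clusters = [[i] for i in range(len(points))]
    schedule, solution = [], None
    while True:
        if len(clusters) == n_clusters:
            solution = sorted(sorted(c) for c in clusters)
        if len(clusters) == 1:
            break
        # Find the pair of clusters with the smallest average distance.
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: average_linkage(points, clusters[p[0]], clusters[p[1]]),
        )
        coefficient = average_linkage(points, clusters[a], clusters[b])
        schedule.append((sorted(clusters[a]), sorted(clusters[b]), round(coefficient, 3)))
        merged = clusters[a] + clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    return schedule, solution


# Five hypothetical cases measured on two variables (assumed data).
points = [(1.0, 1.0), (1.0, 2.0), (5.0, 5.0), (5.0, 6.0), (9.0, 1.0)]
schedule, solution = agglomerate(points, n_clusters=3)

# Cluster membership: case index -> cluster number in the 3-cluster solution.
membership = {case: k for k, cluster in enumerate(solution, start=1) for case in cluster}
# Cluster sizes: number of cases per cluster.
sizes = Counter(membership.values())

for stage, (left, right, coefficient) in enumerate(schedule, start=1):
    print(f"Stage {stage}: merge {left} + {right} at {coefficient}")
print("Membership:", membership)
print("Sizes:", dict(sizes))
```

In this toy example the coefficient stays at 1.0 for the first two stages and then jumps to roughly 5.68 at stage 3, so stopping before that merge leaves three clusters of sizes 2, 2, and 1. The same reasoning applies when reading the coefficients column of the SPSS agglomeration schedule.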

12. How to Report Results of Hierarchical Cluster Analysis in APA

Reporting the results of Hierarchical Cluster Analysis in APA (American Psychological Association) format requires a structured presentation. Here’s a step-by-step guide in list format:

- Introduction: Briefly describe the purpose of the analysis and the theoretical background.

- Method: Detail the data collection process, the variables used, and the clustering method and distance measure selected.

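- Results: Report the number of clusters retained and how it was chosen (for example, from the dendrogram or the agglomeration schedule), the size of each cluster, and the characteristics that distinguish the clusters.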

- Figures and Tables: Include relevant plots and tables, ensuring they are properly labelled and referenced.

- Discussion: Interpret the results, highlighting the significance of the findings and their implications.

- Conclusion: Summarise the main points and suggest potential areas for further research.
