Binary Logistic Regression in SPSS

Discover Binary Logistic Regression in SPSS! Learn how to perform, understand SPSS output, and report results in APA style. Check out this simple, easy-to-follow guide below for a quick read!

Struggling with the Logistic Regression in SPSS? We’re here to help. We offer comprehensive assistance to students, covering assignments, dissertations, research, and more. Request Quote Now!

Introduction

Welcome to an in-depth exploration of Binary Logistic Regression in SPSS, a powerful statistical technique that unlocks insights in various fields, from healthcare to marketing. In this blog post, we’ll navigate the intricacies of binary logistic regression, providing you with a comprehensive understanding of its applications, assumptions, and practical implementation. Whether you’re a student or a novice in statistical modeling, this guide will equip you with the knowledge and skills to leverage binary logistic regression effectively.

Types of Logistic Regression

Before delving into binary logistic regression, let’s take a moment to explore the broader landscape of logistic regression. There are three primary types: Binomial Logistic Regression, Multinomial Logistic Regression, and Ordinal Logistic Regression.

- Binomial Logistic Regression deals with binary outcomes, where the dependent variable has only two possible categories, such as yes/no or pass/fail.

- Multinomial Logistic Regression comes into play when the dependent variable has more than two unordered categories, allowing us to predict which category a case is likely to fall into.

- Ordinal Logistic Regression is employed when the dependent variable has multiple ordered categories, like low, medium, and high, enabling us to predict the likelihood of a case falling into or above a specific category.

In this post, our focus will be on Binary Logistic Regression, which is widely used for binary outcomes and forms the foundation for understanding logistic regression.

Definition: Binary Logistic Regression

Binary Logistic Regression is a statistical method that deals with predicting binary outcomes, making it an invaluable tool in various fields, including healthcare, finance, and social sciences. In binary logistic regression, the dependent variable is categorical with only two possible outcomes, often coded as 0 and 1. This technique allows us to model and understand the relationship between one or more independent variables and the probability of an event occurring or not occurring.

Logistic Regression Equation

At the core of Binary Logistic Regression lies the Logistic Regression Equation, which is vital for understanding the relationship between the predictor variables and the binary outcome. The equation can be expressed as follows:

ln (p/p-1)( = b_0 + b_1X_1 + b_2X_2 + …..+ b_kX_k \]

Where;

- p represents the probability of the binary outcome (e.g., success or failure).

- b_0 is the intercept term.

- b_1, b_2, …., b_k are the coefficients for the independent variables ( X_1, X_2, …, X_k ).

- ln denotes the natural logarithm.

Coefficients and Odds ratio

The coefficients ( b_1, b_2, …., b_k ) represent the slope of the relationship between each predictor variable and the log odds of the binary outcome. The odds ratio, which can be calculated as ( exp(b_i) ), helps in interpreting the impact of each predictor. An odds ratio greater than 1 indicates that an increase in the predictor variable is associated with higher odds of the event occurring, while an odds ratio less than 1 implies lower odds. Understanding this equation and the concept of odds ratios is fundamental for grasping the mechanics of binary logistic regression.

Logistic Regression Classification

In Binary Logistic Regression, classification plays a crucial role. Classification involves assigning cases to one of the two categories based on their predicted probabilities of belonging to a particular class. Typically, a threshold probability of 0.5 is used, where cases with predicted probabilities greater than or equal to 0.5 are classified into one category, and those with predicted probabilities less than 0.5 are assigned to the other category. However, the choice of the threshold can be adjusted depending on the specific needs and priorities of the analysis.

Binary Logistic Regression not only predicts the outcome but also provides insights into the factors that influence the outcome. By examining the coefficients and odds ratios associated with each predictor variable, analysts can identify the significance and direction of these influences. This information is invaluable for decision-making and understanding the driving forces behind binary outcomes in various scenarios, such as predicting customer churn, diagnosing medical conditions, or assessing the likelihood of loan default.

Assumptions of Binary Logistic Regression

Before diving into the practical aspects of Binary Logistic Regression, it’s essential to be aware of the assumptions that underlie the reliability of this technique. These assumptions include:

- Independence of Observations: The observations (cases) in the dataset should be independent of each other, meaning that the outcome of one case should not influence the outcome of another.

- Linearity of the Logit: The relationship between the predictor variables and the log odds of the binary outcome should be linear. It is often verified through methods like the Box-Tidwell procedure.

- Multicollinearity: There should be no high multicollinearity among the independent variables, which could lead to unstable coefficient estimates.

- Absence of Outliers: Outliers, if present, should be minimal, as they can influence the estimation of coefficients and model fit.

- Adequate Sample Size: The sample size should be sufficiently large to ensure the stability and validity of the logistic regression model. Some guidelines suggest a minimum of 10-20 cases per predictor variable.

Adhering to these assumptions is critical to ensure the reliability and validity of the results obtained from Binary Logistic Regression.

Hypothesis of Binary Logistic Regression

In Binary Logistic Regression, hypotheses guide the analysis and the interpretation of results. Specifically, two hypotheses are central to binary logistic regression:

- Null Hypothesis (H0): there is no significant relationship between the independent variables and the binary outcome.

- Alternative Hypothesis (H1): At least one of the independent variables has a significant effect on the binary outcome.

Hypothesis testing in binary logistic regression involves examining the significance of the coefficients associated with each independent variable. If any coefficient has a p-value less than the chosen significance level (commonly 0.05), it implies that the corresponding variable has a significant effect on the outcome. Hypothesis testing is a critical step in determining which predictor variables contribute significantly to the model and understanding their impact on the binary outcome.

An Example of Binary Logistic Regression

To illustrate binary logistic regression in action, consider a medical study aiming to predict whether patients are at a high risk of developing a particular medical condition based on their age, cholesterol level, and blood pressure. The binary outcome is whether the patient develops the condition (coded as 1) or not (coded as 0).

In this example, binary logistic regression would enable us to examine how age, cholesterol level, and blood pressure collectively influence the likelihood of developing the medical condition. By analyzing the coefficients and odds ratios associated with each predictor, we can identify which factors are significant predictors of the condition and quantify their impact. This practical application demonstrates how binary logistic regression is employed to make predictions and inform decision-making in real-world scenarios.

Step by Step: Running Logistic Regression in SPSS Statistics

Now, let’s delve into the step-by-step process of conducting the Binary Logistic Regression using SPSS Statistics. Here’s a step-by-step guide on how to perform a Binary Logistic Regression in SPSS:

- STEP: Load Data into SPSS

Commence by launching SPSS and loading your dataset, which should encompass the variables of interest – a categorical independent variable. If your data is not already in SPSS format, you can import it by navigating to File > Open > Data and selecting your data file.

- STEP: Access the Analyze Menu

In the top menu, locate and click on “Analyze.” Within the “Analyze” menu, navigate to “Regression” and choose ” Linear” Analyze > Regression> Binary Logistic

- STEP: Choose Variables

In the Binary Logistic Regression dialog box, move the binary outcome variable into the “Dependent” box and the predictor variables into the “Block” box. (if you have categorical variables, please indicate them in “Categorical” )

Click “Options” and check “Hosmer-Lemeshow goodness – of – fit”, “CI for exp (B)” and “Correlation of estimates”

- STEP: Generate SPSS Output

Once you have specified your variables and chosen options, click the “OK” button to perform the analysis. SPSS will generate a comprehensive output, including the requested frequency table and chart for your dataset.

Executing these steps initiates the Binary Logistic Regression in SPSS, allowing researchers to assess the impact of the teaching method on students’ test scores while considering the repeated measures. In the next section, we will delve into the interpretation of SPSS output for Binary Logistic Regression.

Note

Conducting a Binary Logistic Regression in SPSS provides a robust foundation for understanding the key features of your data. Always ensure that you consult the documentation corresponding to your SPSS version, as steps might slightly differ based on the software version in use. This guide is tailored for SPSS version 25, and any variations, it’s recommended to refer to the software’s documentation for accurate and updated instructions.

How to Interpret SPSS Output of Binary Logistic Regression

Interpreting the SPSS output of binary logistic regression involves examining key tables to understand the model’s performance and the significance of predictor variables. Here are the essential tables to focus on:

Model Summary Table

The Model Summary table provides information about the overall fit of the model. Look at the Cox & Snell R Square and Nagelkerke R Square values. Higher values indicate better model fit.

Variables not in the Equation Table

This table lists predictor variables that were not included in the final model. It can be helpful in understanding which variables did not contribute significantly to the prediction.

Omnibus Tests of Model Coefficients

This table presents a Chi-Square test for the overall significance of the model. A significant p-value suggests that at least one predictor variable is significantly associated with the binary outcome.

Hosmer and Lemeshow Test

The Hosmer and Lemeshow Test assesses the goodness of fit of the model. A non-significant p-value indicates that the model fits the data well. However, a significant p-value suggests that there may be issues with the model’s fit.

Classification Table

The Classification Table displays the accuracy of the model in classifying cases into their respective categories. It includes information on true positives, true negatives, false positives, and false negatives. The overall classification accuracy percentage indicates how well the model predicts the binary outcome.

Variables in the Equation Table

This table lists the predictor variables included in the final model, along with their coefficients (Bs) and significance levels.

- Coefficients (B) represent the impact of each predictor on the log odds of the binary outcome.

- Exp (B): Odds ratios, which can be calculated as Exp (B) indicate the change in odds for a one-unit change in the predictor. An odds ratio greater than 1 suggests an increase in the odds of the event occurring, while a value less than 1 implies a decrease.

- Wald Statistics Wald statistics values for each predictor variable. These values help assess the significance of each predictor. Lower p-values indicate a more significant impact on the outcome.

- t-values: Indicate how many standard errors the coefficients are from zero. Higher absolute t-values suggest greater significance.

- P values: Test the null hypothesis that the corresponding coefficient is equal to zero. A low p-value suggests that the predictors are significantly related to the dependent variable.

By thoroughly examining these output tables, you can gain a comprehensive understanding of the binary logistic regression model’s performance and the significance of predictor variables. This information is essential for making informed decisions and drawing meaningful conclusions from your analysis.

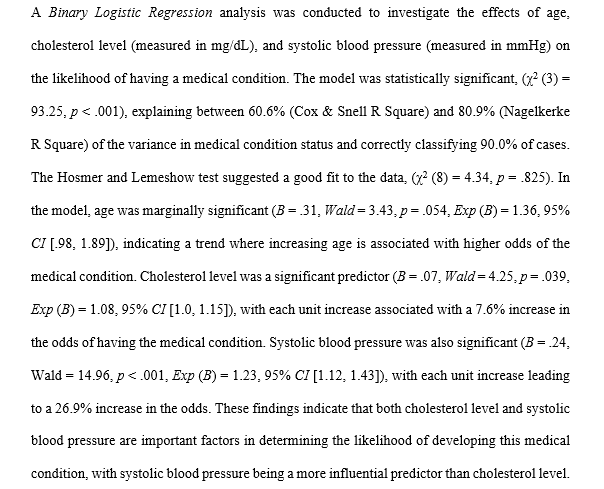

How to Report Results of Binary Logistic Regression in APA

Reporting the results of binary logistic regression in APA (American Psychological Association) format requires a structured presentation. Here’s a step-by-step guide in list format:

- Introduction: Begin by introducing the purpose of the binary logistic regression analysis and the specific research question or hypothesis you aimed to address.

- Assumption Check: Briefly mention the verification of assumptions, ensuring the robustness of the binary logistic regression analysis.

- Model Specification: Clearly state the outcome variable (e.g., “The dependent variable, ‘Binary Outcome,’ represented whether…”), and list the predictor variables included in the analysis.

- Model Fit: Report the model fit statistics. Include the Cox & Snell R Square and Nagelkerke R Square values to assess how well the model explains the variation in the binary outcome.

- Predictor Variables: Present a summary of the significant predictor variables included in the final model. Include the variable names, coefficients (Bs), and odds ratios with confidence intervals (e.g., “Variable A (B = 0.543, Odds Ratio = 1.721, 95% CI [1.243, 2.377])”)

Goodness of Fit

- Discuss the Hosmer and Lemeshow Test results to assess the goodness of fit. Mention whether the model fits the data well or if there are potential issues (e.g., “The Hosmer and Lemeshow Test indicated a good fit, χ²(7) = 6.245, p = 0.512”).

- Classification Accuracy: Provide information from the Classification Table, such as the overall classification accuracy percentage and the number of true positives, true negatives, false positives, and false negatives.

- Overall Model Significance: Indicate whether the overall model was statistically significant using the Omnibus Tests of Model Coefficients. Include the Chi-Square value and associated p-value (e.g., “The model was statistically significant, χ²(3) = 12.456, p < 0.001”).

- Hypothesis Testing: Address the research hypotheses by discussing the significance of each predictor variable. Emphasize whether they are associated with the binary outcome based on their p-values.

- Practical Implications: Conclude by discussing the practical significance of the findings and how they contribute to the broader understanding of the phenomenon under investigation.

Get Help For Your SPSS Analysis

Embark on a seamless research journey with SPSSAnalysis.com, where our dedicated team provides expert data analysis assistance for students, academicians, and individuals. We ensure your research is elevated with precision. Explore our pages;

- Biostatistical Modeling Expert

- Statistical Methods for Clinical Studies

- Epidemiological Data Analysis

- Biostatistical Support for Researchers

- Clinical Research Data Analysis

- Medical Data Analysis Expert

- Biostatistics Consulting

- Healthcare Data Statistics Consultant

- SPSS Data Analysis Help – SPSS Helper,

- Quantitative Analysis Help,

- Qualitative Analysis Help,

- SPSS Dissertation Analysis Help,

- Dissertation Statistics Help,

- Statistical Analysis Help,

- Medical Data Analysis Help.

Connect with us at SPSSAnalysis.com to empower your research endeavors and achieve impactful results. Get a Free Quote Today!